It seems my latest obsession is also Wall Street’s. AI stock trading is having its moment in the sun.

Hedge funds and retail investors are racing to apply large language models (LLMs) like ChatGPT to predict stock movements, hoping to gain an edge.

However, many folks’ limited success points to a crucial misunderstanding of AI.

So, I thought I’d connect some dots for you today.

Picture this.

You fire up your shiny new AI bot. It can write poems, debug code and draft emails. Surely it can predict whether Tesla will go up tomorrow?

After all, it’s brilliant at predicting the next word in a sentence. Why not the next tick in a stock chart?

A Zipf-y Mystery

Let’s first establish that large language models like ChatGPT don’t ‘think’. They don’t ‘know’.

Really, they’re glorified guessers.

Their amazing trick is something called ‘autoregression’, a fancy word for guessing the next thing in a sequence based on what came before. You’re pretty good at it yourself.

If I say, ‘Once upon a…’, you’d safely guess the next word is ‘time’.

These models are doing the same, but on a mind-boggling scale, and to great success.
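To make that guessing game concrete, here's a minimal sketch of next-word prediction. It is nowhere near how ChatGPT works under the hood (real models use neural networks trained on vast amounts of text). It simply counts which word tends to follow which in a tiny made-up corpus. The function names `train_bigram` and `predict_next` and the corpus itself are my own illustrations.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Tiny invented corpus, purely for illustration.
corpus = (
    "once upon a time there was a king . "
    "once upon a time there was a queen . "
    "once upon a hill there was a castle ."
)

model = train_bigram(corpus)
print(predict_next(model, "a"))      # time
print(predict_next(model, "upon"))   # a
print(predict_next(model, "zebra"))  # None (never seen in the corpus)
```

The guess is only as good as the patterns in the text it has already seen, which is the point to hold onto for what follows.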

But things break down when we move from fairytales to the chaotic nature of finance.

Why does this work in language?

Because language has structure, the kind we describe with words like topic, grammar, and syntax. But it doesn’t end there.

You may think you understand this conceptually, but let me try to bake this into your brain.

You see, words and letters follow a deeper structure. And we don’t know why.

Even the words and letters used in this article, hell, in any article (and any language), follow something called Zipf’s law.

The law appears in many different domains, including language, biology, and economics. No single theory explains its ubiquity.

So what does the law say?

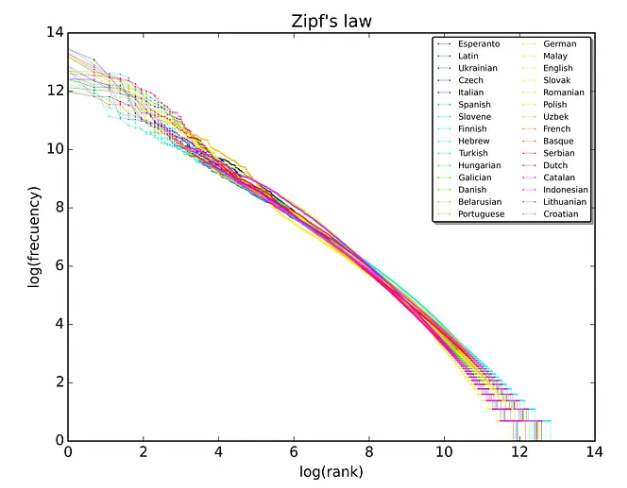

Take any sizeable piece of writing in any language and rank its words by how often they appear. You’ll find the most common word occurs around twice as often as the second most common word, three times as often as the third most common, and so on down the list.
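Put another way, a word's frequency is roughly proportional to 1 divided by its rank. Here's a small sketch of how you might check that yourself. The helper `zipf_table` is my own illustration, and the sample text is deliberately constructed to fit the law exactly. Real writing only approximates it, and you'd want to strip punctuation first.

```python
from collections import Counter


def zipf_table(text, top=5):
    """Rank words by frequency and compare each count with the
    Zipf prediction (top word's count divided by the rank)."""
    ranked = Counter(text.lower().split()).most_common(top)
    top_count = ranked[0][1]
    rows = []
    for rank, (word, count) in enumerate(ranked, start=1):
        predicted = top_count / rank
        rows.append((rank, word, count, predicted))
    return rows


# Artificial sample built to follow the law exactly (an assumption
# for illustration, not real-world data).
sample = "the " * 12 + "of " * 6 + "and " * 4 + "to " * 3

for rank, word, count, predicted in zipf_table(sample, top=4):
    print(rank, word, count, predicted)
# 1 the 12 12.0
# 2 of 6 6.0
# 3 and 4 4.0
# 4 to 3 3.0
```

Run the same function over a long article or a book and the observed counts should land in the neighbourhood of the predicted column, though rarely spot on.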

This pattern is remarkably widespread (you’ve likely heard of its cousin, the Pareto Principle). If we rank all the words in all the languages on Wikipedia, it looks like this:

Source: Medium

We’ve even observed Zipf’s law in languages we haven’t been able to translate yet. Thankfully, this law is a great help for AI.

It’s this internal structure of language that makes AI seem so familiar — so human in its responses.

Language itself provides the stepping stones for AI to cross the chaotic river of human communication and seem so natural.

But again, AI isn’t thinking. It’s using math to follow a path… and that path can get rocky when we enter the world of finance.

The Achilles’ Heel of LLMs

Unlike the orderly structure of language, finance is the Wild West.

While sentences may be built on stable foundations of grammar that can persist for centuries, markets exist in flux.

Price movements don’t follow neat grammatical rules. They’re driven by new information, shifting sentiment, regulatory shocks… and occasionally pure mania.

Add to that a constant state of self-erasure and discovery that makes and destroys markets.

In this view, the efficient market hypothesis isn’t a stuffy theory. It’s a messy, chaotic reality that’s actively enforced by millions of participants hunting for ‘alpha’.

Predictable patterns in the stock market are like blood in the water. They attract predators who feed on them until nothing remains.

It’s this adversarial nature of markets, the endless messy competition, that keeps them fresh and somewhat balanced.

But it’s also why language-based pattern-matching AI can run into trouble.

It’s like trying to learn a game whose rules change precisely because you’ve learned them.
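Here's a deliberately crude sketch of that 'blood in the water' effect. It assumes that in each period, traders who have spotted a pattern compete away a fixed share of whatever edge is left. The function `simulate_edge_decay` and the `capture_rate` parameter are invented for illustration. They are not an empirical estimate or a real market model.

```python
def simulate_edge_decay(initial_edge, capture_rate, periods):
    """Toy model: each period, competing traders remove a fixed
    fraction (capture_rate) of the remaining edge."""
    edge = initial_edge
    history = [edge]
    for _ in range(periods):
        edge *= 1.0 - capture_rate
        history.append(edge)
    return history


# Hypothetical numbers: a pattern worth 1.0 unit of excess return,
# with half of the remaining edge competed away each period.
history = simulate_edge_decay(initial_edge=1.0, capture_rate=0.5, periods=4)
print(history)  # [1.0, 0.5, 0.25, 0.125, 0.0625]
```

Even in this simplified picture, the edge shrinks fastest right after the pattern becomes widely known. A model trained on yesterday's pattern is chasing something that has already been eaten.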

So, Can AI Still Help?

Yes, but not in the way most imagine. Don’t expect ChatGPT to spit out ‘Buy Tesla tomorrow.’

For that, you need to look towards other forms of AI that operate outside the structure of language.

If that sounds like it’s up your alley, then expect an announcement from us soon.

We’re expanding how we think about, and use, AI here at Fat Tail. And we hope you’ll follow along for more.

For those who want to know what ChatGPT can do today, it can still shine in supporting roles. That includes things like:

- Digesting huge reams of news and business filings.

- Simulating scenarios to stress-test portfolios.

- Surfacing relationships between companies, sectors, and events that humans might overlook.

Think of it less as an oracle, more as a turbocharged research assistant.

The idea of a single AI cracking markets is seductive — but misplaced.

Markets are chaotic because they are constantly adapting. That’s what keeps them alive, and that’s why ‘next-token prediction’ isn’t enough.

AI will absolutely change investing. But its edge will come from augmentation, not clairvoyance.

The winners won’t be those who expect machines to replace human judgment.

They’ll be those who learn to combine AI’s brute-force synthesis with human traders’ creativity and risk sense.

Regards,

Charlie Ormond,

Small-Cap Systems and Altucher’s Investment Network Australia
